“In today’s rapidly evolving digital landscape, we see a growing number of services and environments (in which those services run) that our customers use on Azure. Ensuring the performance and security of Azure means our teams are vigilant about regular maintenance and updates to keep pace with customer needs. Stability, reliability, and rolling out timely updates remain our top priority when testing and deploying changes. In minimizing impact to customers and services, we must account for the multifaceted software, hardware, and platform landscape. This is an example of an optimization problem, an industry concept that revolves around finding the best way to allocate resources, manage workloads, and ensure performance while keeping costs low and adhering to various constraints. Given the complexity and ever-changing nature of cloud environments, this task is both critical and challenging.

I’ve asked Rohit Pandey, Principal Data Scientist Manager, and Akshay Sathiya, Data Scientist, from the Azure Core Insights Data Science Team to discuss approaches to optimization problems in cloud computing and share a resource we’ve developed for customers to use to solve these problems in their own environments.” - Mark Russinovich, CTO, Azure

Optimization problems in cloud computing

Optimization problems exist across the technology industry. Today’s software products are engineered to function across a wide array of environments such as websites, applications, and operating systems. Similarly, Azure must perform well on a diverse set of servers and server configurations that span hardware models, virtual machine (VM) types, and operating systems across a production fleet. Under the constraints of time, computational resources, and increasing complexity as we add more services, hardware, and VMs, it may not be possible to reach an optimal solution. For problems such as these, an optimization algorithm is used to identify a near-optimal solution that uses a reasonable amount of time and resources. Using an optimization problem we encounter in setting up the environment for a software and hardware testing platform, we will discuss the complexity of such problems and introduce a library we created to solve these kinds of problems that can be applied across domains.

Environment design and combinatorial testing

If you were to design an experiment for evaluating a new medication, you would test on a diverse demographic of users to assess potential negative effects that may affect a select group of people. In cloud computing, we similarly need to design an experimentation platform that, ideally, would be representative of all the properties of Azure and would sufficiently test every possible configuration in production. In practice, that would make the test matrix too large, so we have to target the important and risky configurations. Additionally, just as you might avoid taking two medications that can negatively affect one another, properties within the cloud also have constraints that need to be respected for successful use in production. For example, hardware one might only work with VM types one and two, but not three and four. Lastly, customers may have additional constraints that we must consider in our environment.

With all of the doable combos, we should design an surroundings that may check the necessary combos and that takes into consideration the varied constraints. AzQualify is our platform for testing Azure inner packages the place we leverage managed experimentation to vet any modifications earlier than they roll out. In AzQualify, packages are A/B examined on a variety of configurations and combos of configurations to determine and mitigate potential points earlier than manufacturing deployment.

While it would be ideal to test the new medication and collect data on every possible user and every possible interaction with every medication in every scenario, there is not enough time or resources to do that. We face the same constrained optimization problem in cloud computing. This problem is NP-hard.

NP-hard problems

An NP-hard, or Nondeterministic Polynomial Time hard, problem is at least as hard as every problem in NP: no efficient algorithm is known for solving it, and for the optimization problems discussed here it can be hard even to verify that a proposed solution is the best one. Using the example of a new medication that may cure multiple diseases, testing this medication involves a series of highly complex and interconnected trials across different patient groups, environments, and conditions. Each trial’s outcome might depend on others, making it not only hard to conduct but also very challenging to verify all the interconnected results. We can neither determine whether this medication is the best nor confirm it if someone claims that it is. In computer science, it has not yet been proven (and is considered unlikely) that the best solutions for NP-hard problems are efficiently obtainable.

Another NP-hard problem we consider in AzQualify is the allocation of VMs across hardware to balance load. This involves assigning customer VMs to physical machines in a way that maximizes resource utilization, minimizes response time, and avoids overloading any single physical machine. To visualize the best possible approach, we use a property graph to represent and solve problems involving interconnected data.
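To make the load-balancing problem concrete, here is a minimal sketch of a well-known greedy heuristic (largest VM first, placed on the least-loaded machine). It is not the approach used in AzQualify or in the optimizn library; the VM names, load values, and the `balance_vms` function are hypothetical and chosen only for illustration.

```python
import heapq

def balance_vms(vm_loads, machine_count):
    """Greedy heuristic: place each VM, largest first, on the least-loaded machine."""
    # Min-heap of (current_load, machine_index) so the lightest machine pops first.
    heap = [(0, m) for m in range(machine_count)]
    heapq.heapify(heap)
    assignment = {m: [] for m in range(machine_count)}
    # Sort by descending load; break ties by name so the result is deterministic.
    for vm, load in sorted(vm_loads.items(), key=lambda kv: (-kv[1], kv[0])):
        current, m = heapq.heappop(heap)
        assignment[m].append(vm)
        heapq.heappush(heap, (current + load, m))
    return assignment

# Hypothetical VM resource demands (arbitrary units).
vm_loads = {"vm1": 8, "vm2": 7, "vm3": 6, "vm4": 5, "vm5": 4}
assignment = balance_vms(vm_loads, machine_count=2)
machine_loads = {m: sum(vm_loads[v] for v in vms) for m, vms in assignment.items()}
print(assignment)
print(machine_loads)
```

On this toy input the heuristic ends with machine loads of 17 and 13, while a perfectly balanced split of 15 and 15 exists (8 + 7 and 6 + 5 + 4). That gap illustrates the trade-off described earlier: a fast algorithm yields a near-optimal answer, and finding the true optimum in general requires far more search.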

Property graph

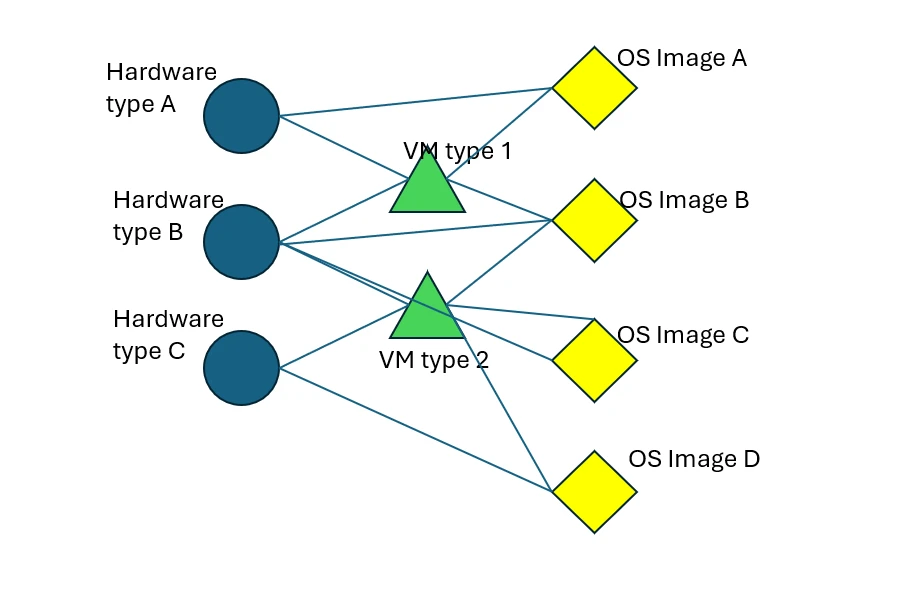

A property graph is a data structure commonly used in graph databases to model complex relationships between entities. In this case, we can illustrate different types of properties, with each type using its own vertices, and edges to represent compatibility relationships. Each property is a vertex in the graph, and two properties have an edge between them if they are compatible with each other. This model is especially helpful for visualizing constraints. Additionally, expressing constraints in this form allows us to leverage existing concepts and algorithms when solving new optimization problems.

Figure 1 shows an example property graph consisting of three types of properties (hardware model, VM type, and operating system). Vertices represent specific properties such as hardware models (A, B, and C, represented by blue circles), VM types (D and E, represented by green triangles), and OS images (F, G, H, and I, represented by yellow diamonds). Edges (black lines between vertices) represent compatibility relationships. Vertices connected by an edge represent properties compatible with each other, such as hardware model C, VM type E, and OS image I.

Figure 1: An example property graph showing compatibility between hardware models (blue), VM types (green), and operating systems (yellow)
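A property graph like this one can be held in a simple adjacency structure. The sketch below is one possible representation, not the one used by AzQualify or optimizn. Only the edges among C, E, and I are taken from the description above; every other edge is an assumption made up for illustration, since the full edge list of Figure 1 is not given in the text.

```python
from collections import defaultdict

# Property type of each vertex, following the description of Figure 1.
vertex_type = {
    "A": "hardware", "B": "hardware", "C": "hardware",
    "D": "vm_type", "E": "vm_type",
    "F": "os_image", "G": "os_image", "H": "os_image", "I": "os_image",
}

# Compatibility edges. Only C-E, C-I, and E-I come from the text;
# the remaining edges are hypothetical.
edges = [
    ("C", "E"), ("C", "I"), ("E", "I"),
    ("A", "D"), ("A", "F"), ("D", "F"),
    ("B", "D"), ("B", "G"), ("D", "G"),
    ("B", "E"), ("B", "H"), ("E", "H"),
]

def build_graph(edge_list):
    """Return an undirected graph as a dict mapping each vertex to its neighbours."""
    adjacency = defaultdict(set)
    for u, v in edge_list:
        adjacency[u].add(v)
        adjacency[v].add(u)
    return dict(adjacency)

def compatible(graph, u, v):
    """Two properties are compatible when an edge joins them."""
    return v in graph.get(u, set())

graph = build_graph(edges)
print(compatible(graph, "C", "E"))  # edge stated in the text
print(compatible(graph, "A", "E"))  # no such edge in this hypothetical graph
```

Storing neighbours in sets keeps each compatibility check to a single lookup, which matters once the graph is queried repeatedly inside a search.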

In Azure, nodes are physically located in datacenters across multiple regions. Azure customers use VMs, which run on nodes. A single node may host several VMs at the same time, with each VM allocated a portion of the node’s computational resources (for example, memory or storage) and running independently of the other VMs on the node. For a node to have a hardware model, a VM type to run, and an operating system image on that VM, all three need to be compatible with each other. On the graph, all of these would be connected. Hence, valid node configurations are represented by cliques (each having one hardware model, one VM type, and one OS image) in the graph.
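The clique condition can be checked directly: a (hardware model, VM type, OS image) triple is a valid node configuration only if all three pairs are joined by an edge. The sketch below enumerates such triples by brute force. As before, only the C, E, I edges come from the text; the other edges are hypothetical stand-ins for Figure 1.

```python
from itertools import combinations, product

hardware_models = ["A", "B", "C"]
vm_types = ["D", "E"]
os_images = ["F", "G", "H", "I"]

# Undirected compatibility edges; all but C-E, C-I, E-I are hypothetical.
edges = {frozenset(pair) for pair in [
    ("C", "E"), ("C", "I"), ("E", "I"),
    ("A", "D"), ("A", "F"), ("D", "F"),
    ("B", "D"), ("B", "G"), ("D", "G"),
    ("B", "E"), ("B", "H"), ("E", "H"),
]}

def is_clique(vertices):
    """True when every pair of the given vertices is connected by an edge."""
    return all(frozenset(pair) in edges for pair in combinations(vertices, 2))

# Keep only the triples (one property of each type) that form a clique.
all_triples = list(product(hardware_models, vm_types, os_images))
valid_configs = [triple for triple in all_triples if is_clique(triple)]

print(len(all_triples), "candidate configurations")
for config in valid_configs:
    print(config)
```

With these assumed edges, only 4 of the 24 candidate triples are valid. Brute-force enumeration is fine at this size, but the number of candidates grows multiplicatively with every property type added, which is why the test matrix becomes unmanageable in practice.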

An example of the environment design problem we solve in AzQualify is needing to cover all the hardware models, VM types, and operating system images in the graph above. Let’s say we need hardware model A to be 40% of the machines in our experiment, VM type D to be 50% of the VMs running on the machines, and OS image F to be on 10% of all the VMs. Lastly, we must use exactly 20 machines. Working out how to allocate the hardware, VM types, and operating system images among these machines so that the compatibility constraints in Figure 1 are satisfied, and so that we get as close as possible to satisfying the other requirements, is an example of a problem for which no efficient algorithm is known.
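A toy version of this allocation problem can be written down and solved by exhaustive search. The sketch below rests on several assumptions that are not in the original: the list of valid configurations is hypothetical (only C, E, I is confirmed by the text), each machine runs exactly one VM so that VM percentages and machine percentages coincide, and closeness is measured as total absolute deviation in machines. It does not use the optimizn API.

```python
from itertools import product

MACHINES = 20

# Hypothetical valid (hardware, VM type, OS image) configurations.
configs = [("A", "D", "F"), ("B", "D", "G"), ("B", "E", "H"), ("C", "E", "I")]

# Targets from the example, converted to machine counts out of 20:
# A on 40%, D on 50%, F on 10% (assuming one VM per machine).
targets = {"A": 8, "D": 10, "F": 2}

def cost(counts):
    """Total absolute deviation (in machines) from the target shares."""
    total = 0
    for prop, target in targets.items():
        have = sum(n for cfg, n in zip(configs, counts) if prop in cfg)
        total += abs(have - target)
    return total

def allocations():
    """Every way to use exactly MACHINES machines with each configuration at least once."""
    for head in product(range(1, MACHINES + 1), repeat=len(configs) - 1):
        last = MACHINES - sum(head)
        if last >= 1:
            yield head + (last,)

# Requiring at least one machine per configuration covers every property here,
# because some properties appear in only one configuration.
best = min(allocations(), key=cost)
best_cost = cost(best)

for cfg, n in zip(configs, best):
    print(cfg, n)
print("deviation:", best_cost)
```

In this hypothetical graph, hardware model A and OS image F appear only together, so the 40% and 10% targets cannot both be met and the best achievable deviation is 6 machines. Exhaustive search works here because there are fewer than a thousand feasible allocations; with realistic numbers of properties and machines the search space is far too large, which is where heuristic optimization algorithms come in.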

Library of optimization algorithms

We have developed some general-purpose code from learnings extracted from solving NP-hard problems, which we packaged in the optimizn library. Even though Python and R libraries exist for the algorithms we implemented, they have limitations that make them impractical to use on these kinds of complex combinatorial, NP-hard problems. In Azure, we use this library to solve various and dynamic types of environment design problems and implement routines that can be used on any type of combinatorial optimization problem, with attention to extensibility across domains. Our environment design system, which uses this library, has helped us cover a wider variety of properties in testing, leading to us catching five to ten regressions per month. By identifying regressions, we can improve Azure’s internal programs while changes are still in pre-production and minimize potential platform stability and customer impact once changes are broadly deployed.

Learn more about the optimizn library

Understanding how to approach optimization problems is pivotal for organizations aiming to maximize efficiency, reduce costs, and improve performance and reliability. Visit our optimizn library to solve NP-hard problems in your compute environment. For those new to optimization or NP-hard problems, visit the README.md file of the library to see how you can interface with the various algorithms. As we continue learning from the dynamic nature of cloud computing, we make regular updates to general algorithms as well as publish new algorithms designed specifically to work on certain classes of NP-hard problems.

By addressing these challenges, organizations can achieve better resource utilization, enhance user experience, and maintain a competitive edge in the rapidly evolving digital landscape. Investing in cloud optimization is not just about cutting costs; it’s about building a robust infrastructure that supports long-term business goals.