Is it only a few weeks since OpenAI launched its new app for macOS computers?

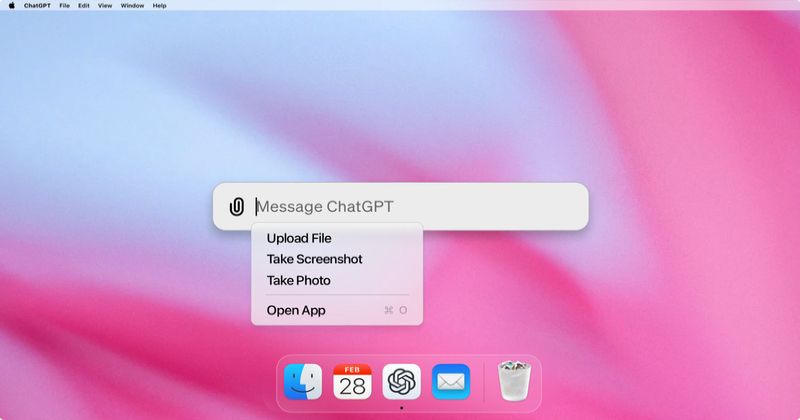

To much fanfare, the makers of ChatGPT unveiled a desktop version that allowed Mac users to ask questions directly rather than via the web.

“ChatGPT seamlessly integrates with how you work, write, and create,” bragged OpenAI.

What could possibly go wrong?

Well, anyone rushing to try out the software may have been rueing their impatience, because – as software engineer Pedro José Pereira Vieito posted on Threads – OpenAI’s ever-so-clever ChatGPT app was doing something really-rather-stupid.

It was storing users’ chats with ChatGPT for Mac in plaintext on their computer. In short, anyone who gained unauthorised access to your computer – whether a malicious remote hacker, a jealous partner, or a rival in the office – would be able to easily read your conversations with ChatGPT and the data associated with them.

As Pereira Vieito described, OpenAI’s app was not sandboxed, and stored all conversations unencrypted in a folder accessible to any other running process (including malware) on the computer.

“macOS has blocked access to any user private data since macOS Mojave 10.14 (6 years ago!). Any app accessing private user data (Calendar, Contacts, Mail, Photos, any third-party app sandbox, etc.) now requires explicit user access,” explained Pereira Vieito. “OpenAI chose to opt-out of the sandbox and store the conversations in plain text in a non-protected location, disabling all of these built-in defenses.”

Thankfully, the security goof has now been fixed. The Verge reports that after it contacted OpenAI about the issue raised by Pereira Vieito, a new version of the ChatGPT macOS app was shipped, properly encrypting conversations.

But the incident acts as a salutary reminder. Right now there is a “gold rush” mentality when it comes to artificial intelligence. Firms are racing ahead with their AI developments, desperate to stay ahead of their rivals. Inevitably that can lead to less care being taken with security and privacy, as shortcuts are taken to push out developments at an ever-faster pace.

My advice to users is not to make the mistake of jumping onto every new development on the day of release. Let others be the first to investigate new AI features and developments. They can be the beta testers who try out AI software when it is most likely to contain bugs and vulnerabilities, and only try it for yourself once you are confident that the creases have been ironed out.